Have you ever had the joy of carrying out acceptance tests? For our team at Delicious Brains, testing our releases has, in the past, been one of the most dreaded tasks on the to-do list. We hold our plugins to a high quality standard, so testing is a must, but manual tests are brain-numbingly tedious and can take hours of expensive developer time.

Recently, we decided it was high time to fix that.

Enter, me + my beginnings of automating the acceptance testing process.

Will it work? Will it save us from hours of brain-numbing manual tests? Will we be better off? Will it all be a fruitless effort?

Read on for more about how the automation of testing our plugins ahead of release is shaping up – including how we manually tested in the past and a look at some of the automated acceptance tests we’ve already implemented.

Be warned, this is not a tutorial! Acceptance testing can be quite a complex thing, so while I’ll show some code, it’s far from complete and primarily here to give you a taste of how we set things up.

What is Acceptance Testing?

So what the devil is acceptance testing and why do we do it?

For Delicious Brains, acceptance testing is the process of testing the final build of a forthcoming plugin release to make sure it’s ready for our customers. In other (larger) companies it’s when the “customer” or their representative tests the release candidate and hopefully signs off on it, “accepting” the release. Once accepted, it’s released into production (or in our case uploaded to our server and released to our customers).

Acceptance tests are very different to unit tests in that you’re testing the software from the customer’s point of view, trying all the actions and scenarios that a customer should and shouldn’t be able to accomplish. When unit testing, you’re testing the individual classes and functions that make up the API for the software. When acceptance testing, you’re clicking buttons, links, inputting text, reading prompts and generally interacting with the software as a user would.

Without acceptance testing, you can’t be sure that the software will work as expected when being used by an end user. It’s the best way to ensure that you have a quality product fit for your customers.

The Manual Method

In the past 3 years, I’ve taken part in many releases of WP Migrate DB Pro and WP Offload S3. Each and every one includes an excruciating period of testing the release. No one in the team likes testing a release as it’s a painfully slow, manual affair, running through an ever-growing group of scripts that detail each step of the tests.

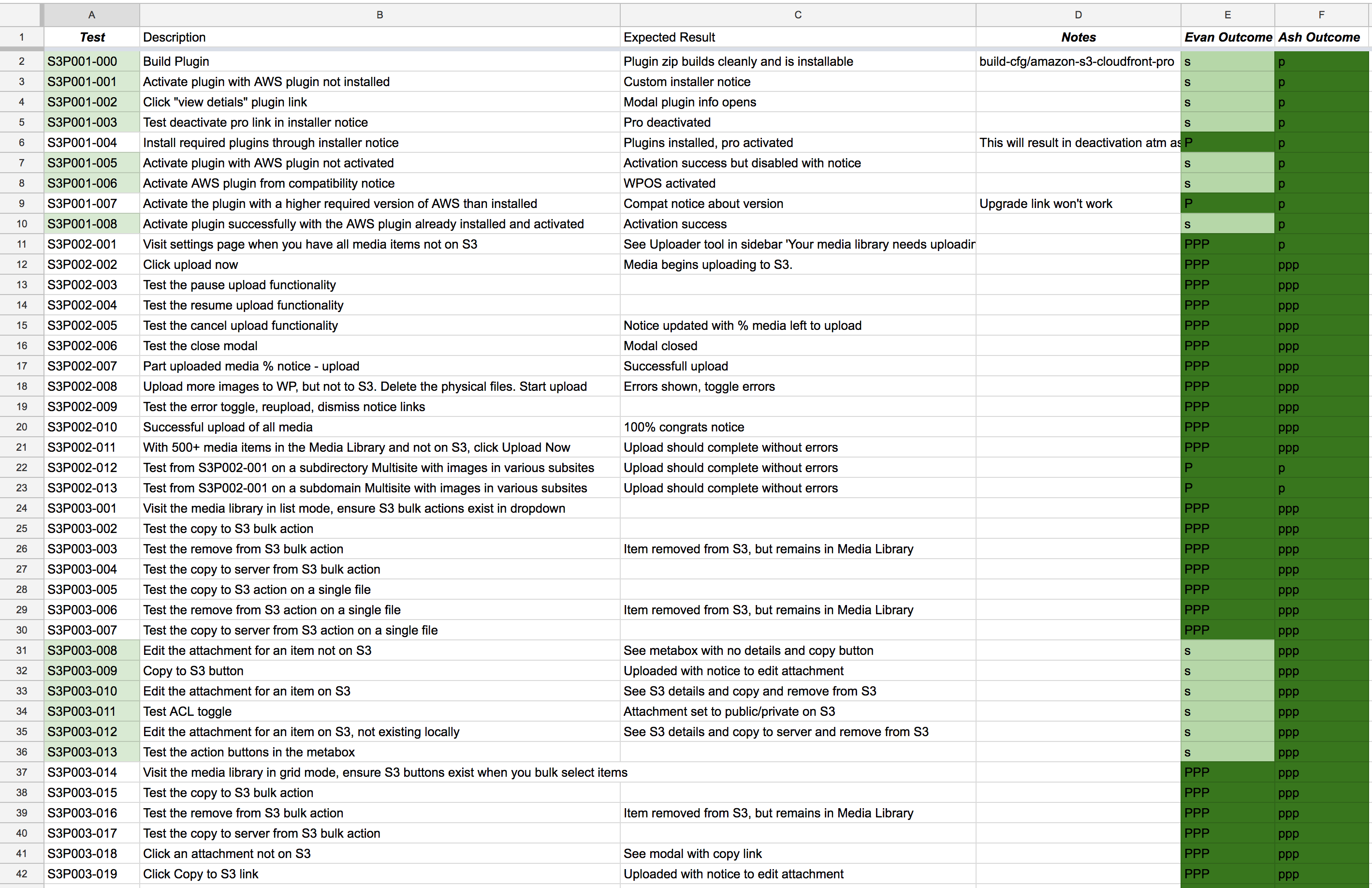

The above screenshot shows a small portion of the release testing script we normally use for WP Offload S3. The script has 116 manual steps which often require further manual checks of database data or objects on S3. If a test fails, you put an “X” in your “Outcome” column which turns the cell red, or a “P” if it passes which turns the cell green.

You see those “PPP” cells in the screenshot? Further down the script there are two lines that tell you to go back and do all those steps again for domain and subdirectory based multisite installs! Soul-destroying.

We also have scripts for our Amazon Web Services, WP Offload S3 Lite and WP Offload S3 Assets addon plugins, as well as the Easy Digital Downloads, WooCommerce, Enable Media Replace, Meta Slider, Advanced Custom Fields and WPML integrations.

And that’s just the WP Offload S3 related scripts. WP Migrate DB Pro also has a suite of scripts for testing WP Migrate DB, WP Migrate DB Pro, and the Media Files, CLI and Multisite Tools addons.

When testing a release, 2-3 developers are tied up for approximately a full week running through all the tests for all the components of the product. Often we use a “chase” format where one developer kicks off the testing, usually starting with the “Lite” version. Once any issues have been addressed for the Lite plugin they move onto the “Pro” version with a second developer starting on the “Lite” testing. If a 3rd developer is required a volunteer is called for from one of the other plugin teams so that someone not as familiar with the feature release gets to “kick the tires” and start testing the Lite version after the 2nd developer has moved onto Pro. Sometimes on a smaller bug fix release, we’ll do a “cross-over” format, with the first dev starting at one end of the scripts and the second starting at the other end, crossing over in the middle to save some time.

As you can probably tell, that’s not a popular part of the release cycle for our plugins. The development period can drag on a bit longer than it probably should too as we feel a bit of anxiety about having to go through the release tests and instead find Very Important Things (cough excuses cough) that must be done before release testing can start. This can cost a lot of expensive developer time, but quality is incredibly important to us as a company so this level of testing is required. In the past, we’ve struggled with hiring professional testers that can handle our very technical products. If you’ve had success yourself, we’d love to hear about it in the comments!

At our company retreat in Vienna last year, Brad brought up a presentation on testing he’d recently watched, which led to a big discussion about the problem. We decided to give automated acceptance testing a go to relieve some of the burden. I volunteered to kick things off for WP Offload S3, to much rejoicing!

How We’re Automating

Technology Selection

We’re using Codeception to drive our acceptance tests. Some of our team already had some experience with Codeception, and frankly there aren’t really any other options if you want to write your tests in PHP, so it was an obvious, if not the only, choice.

The biggest problem any of us had had with Codeception in the past was in setting up the Selenium or PhantomJS servers required for running the tests. Selenium and PhantomJS provide headless web browsers that can be driven through an API to connect to your test website and respond to commands such as “click” to interact with links and buttons, enter text or report the contents of fields or the DOM, run JavaScript and so on. These servers can be a bit tricky to set up. They can also require Java or other dependencies that you may not want to install, and ideally need to be running in the background all the time you’re likely to be running your tests.

Another issue with running acceptance tests is ensuring you have a test website or two that are in a known clean state. Otherwise, you can’t be sure that a problem with your tests isn’t down to a dodgy test website.

To address the above potential issues I opted to run the entire test process within Docker containers so that each run has a consistent environment that can be automatically spun up in seconds.

Docker Compose

To keep things relatively flexible we’re running a few containers that work in unison, and we use docker-compose to keep things straight.

The above gist contains the current docker-compose.yml file for WP Offload S3’s acceptance tests. I’m not going to go into great detail here, but as you can see there’s a combination of data containers that provide consistent mount points for the other web server, database server, Selenium/PhantomJS servers, test runner and plugin build services. Some services depend on others in order to function, so for example the default acceptance-tests service that runs the Codeception tests links to the web-server and selenium-server-chrome services as it can’t do anything without those being up and running.

Above you can see the structure of the docker folder within the WP Offload S3 repository. We have custom Docker images that we build for the data, php-cli and php-server related services, which are needed to satisfy the requirements for running WordPress or Codeception, or for building our plugins.

Our acceptance tests rely on zip files for the plugins being available for two reasons: so we can test installing the plugins and so we’re actually testing the product our customers will get. So as a little bonus I created a docker/build-plugins.sh script that builds our plugins via a container, meaning a developer doesn’t even need to install things such as grunt, yarn or compass on their machine. It sets us up for running the acceptance tests on a dedicated build server, or maybe via Travis CI like we do our unit tests.

There are a few other convenience scripts in the docker folder, but the most important script is run-tests.sh.

Running docker/run-tests.sh on the command line is all it takes to run the entire acceptance test suite. You can however give it arguments to switch from the default Selenium/Chrome to something like Selenium/Firefox or PhantomJS. For example, to run the tests with Firefox we’d use:

docker/run-tests.sh -e firefox

In the next section you’ll learn about the most important argument that we use very often when running the acceptance tests, -t.
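To give a feel for how a wrapper script can handle those two arguments, here is a minimal sketch using POSIX getopts. It is not the real docker/run-tests.sh: the function name, variable names and printed summary are assumptions made for illustration. Only the -e and -t flags and the Selenium/Chrome default come from the description above.

```shell
#!/bin/sh
# Hypothetical sketch only: not the real docker/run-tests.sh.
# Parses -e (browser environment) and -t (test folder or single script).

parse_test_args() {
    env_name="chrome"   # default described above: Selenium/Chrome
    test_path=""        # empty means "run the whole suite"
    OPTIND=1            # reset so the function can be called more than once

    while getopts "e:t:" opt; do
        case "$opt" in
            e) env_name="$OPTARG" ;;
            t) test_path="$OPTARG" ;;
            *) echo "usage: run-tests.sh [-e env] [-t test]" >&2; return 1 ;;
        esac
    done

    # A real script would start the containers here; this one just reports.
    echo "env=${env_name} test=${test_path:-all}"
}

parse_test_args -e firefox -t 01-amazon-s3-and-cloudfront
```

Called with no arguments, the function falls back to the Chrome default and reports that the whole suite would run.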

Codeception

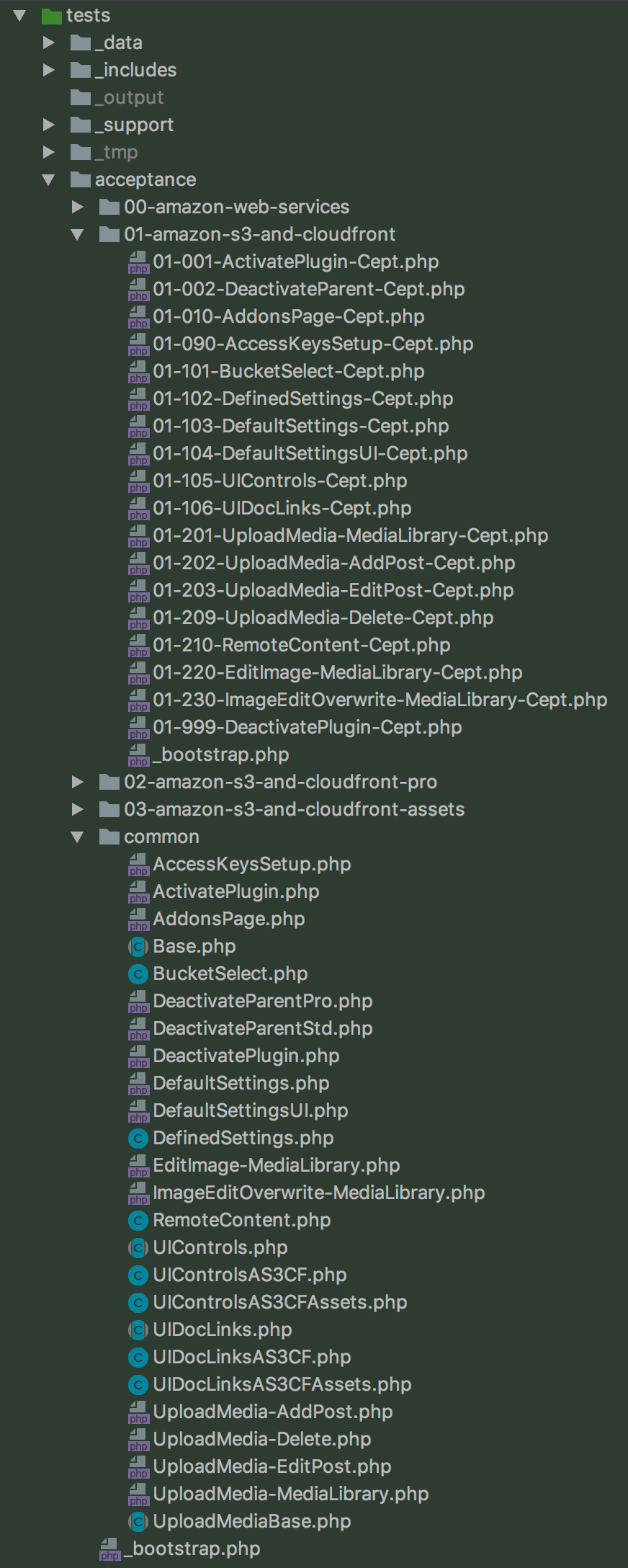

When using Codeception to run your acceptance tests you can structure your scripts into a nice hierarchy of folders and it’ll traverse them in alphabetical order finding anything ending with “-Cept.php”.

We’ve split our acceptance tests into plugin specific folders, prefixing the folders with a 2-digit number to get the order we want. It’s similar inside the folders: each test has a 3-digit prefix to ensure they run in the order we want. The 3-digit prefixes loosely group the test scripts into subject areas such as installation/setup, settings, uploading media, editing media etc.
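The effect of those prefixes is easy to see with a plain alphabetical sort. The sketch below only mimics the ordering described above; it is not how Codeception itself discovers files. The 02-example-addon folder and the 01-010-Settings script are hypothetical names added for illustration.

```shell
#!/bin/sh
# Illustration only: shows how zero-padded prefixes sort alphabetically.
# 02-example-addon and 01-010-Settings are hypothetical names.

list_in_run_order() {
    # Keep only names ending in "-Cept.php", then sort in plain byte order
    # (LC_ALL=C) so the result does not vary with the machine's locale.
    printf '%s\n' "$@" | grep -e '-Cept\.php$' | LC_ALL=C sort
}

list_in_run_order \
    "02-example-addon/02-001-ActivatePlugin-Cept.php" \
    "01-amazon-s3-and-cloudfront/01-010-Settings-Cept.php" \
    "01-amazon-s3-and-cloudfront/_bootstrap.php" \
    "01-amazon-s3-and-cloudfront/01-002-DeactivateParent-Cept.php" \
    "01-amazon-s3-and-cloudfront/01-001-ActivatePlugin-Cept.php"
```

The zero padding is what makes this work: with unpadded numbers, a script numbered 10 would sort ahead of one numbered 2.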

We can run an entire plugin’s tests on its own:

docker/run-tests.sh -t 01-amazon-s3-and-cloudfront

Or we can run a single test script:

docker/run-tests.sh -t 01-amazon-s3-and-cloudfront/01-002-DeactivateParent-Cept

Most of the scripts depend on other scripts for basic setup prerequisites. For example the acceptance/01-amazon-s3-and-cloudfront/01-002-DeactivateParent-Cept.php script looks like this:

<?php

include '01-001-ActivatePlugin-Cept.php';

include COMMON_TESTS_DIR . 'DeactivateParentStd.php';

It’s using the previous 01-001-ActivatePlugin-Cept.php script for basic set up, and for reusability across the different plugins there’s a DeactivateParentStd.php script in the common folder.

The included 01-001-ActivatePlugin-Cept.php script is also super simple:

<?php

include '_bootstrap.php';

include COMMON_TESTS_DIR . 'ActivatePlugin.php';

It’s that _bootstrap.php file that each plugin folder has that makes the difference, setting up a bunch of variables used throughout the tests. Here’s acceptance/01-amazon-s3-and-cloudfront/_bootstrap.php:

<?php

$slug = 'wp-offload-s3-lite';

$plugin_slug = 'amazon-s3-and-cloudfront';

$name = 'WP Offload S3 Lite';

$prefix = 'as3cf';

$tab = 'media';

$addon_name = 'WP Offload S3 Lite';

$plugin_site = "https://wordpress.org/plugins/{$plugin_slug}/";

$plugin_site_title = 'WP Offload S3 Lite';

$install = true;

$parent = new stdClass();

$parent->slug = 'amazon-web-services';

$parent->basename = 'amazon-web-services';

$parent->name = 'Amazon Web Services';

$option_name = 'tantan_wordpress_s3';

$option_version = '5';

$option_version_name = 'post_meta_version';

$default_bucket = 'wpos3.acceptance.testing';

$default_region = '';

$default_cloudfront = 'xxxxxxxxxx.cloudfront.net';

$I = new AcceptanceTester( $scenario );

The most important variable is that $I one at the end: that’s where Codeception instantiates all its magic.

In acceptance/common/DeactivateParentStd.php you can see where $I is used extensively to call Codeception functions:

<?php

$I->assertNotEmpty( $slug, 'slug not empty' );

$I->assertNotEmpty( $plugin_slug, 'plugin_slug not empty' );

$I->assertNotEmpty( $name, 'name not empty' );

$I->assertNotEmpty( $parent, 'parent not empty' );

$I->loginAsAdmin();

$I->amOnPluginsPage();

// Happy path for parent plugin...

$I->seePluginActivated( $parent->slug );

// ... and target plugin.

if ( ! $I->can( 'seePluginActivated', $slug ) && ! $I->can( 'seePluginActivated', $plugin_slug ) ) {

    $I->fail( 'Could not activate ' . $slug );

}

// Parent plugin not installed notice.

global $wp_plugin_dir;

rename( $wp_plugin_dir . '/wp-content/plugins/' . $parent->basename, $wp_plugin_dir . '/wp-content/plugins/' . $parent->basename . '.bak' );

$I->amOnPluginsPage();

$I->seePluginDeactivated( $parent->slug );

$I->see( "{$name} has been disabled as it requires the {$parent->name} plugin. Install and activate it." );

rename( $wp_plugin_dir . "/wp-content/plugins/{$parent->basename}.bak", $wp_plugin_dir . "/wp-content/plugins/{$parent->basename}" );

// Activate parent plugin through notice.

$I->amOnPluginsPage();

$I->seePluginDeactivated( $parent->slug );

$I->see( "{$name} has been disabled as it requires the {$parent->name} plugin. It appears to be installed already." );

$I->click( '#' . $plugin_slug . '-activate-parent' );

$I->seePluginActivated( $parent->slug );

Codeception likes its function names to read as the actions you take and the outcomes you expect. So, in the above code you can see things like “I log in as admin”, “I am on Plugins page”, or “I see plugin activated”.

Well, I say Codeception, but there’s extensive use of the most excellent wp-browser, a WordPress-specific set of extensions for Codeception. That’s how we get to say $I->amOnPluginsPage(); to navigate to the list of installed plugins in the WordPress dashboard without having to know the URL, or $I->seePluginActivated( $parent->slug ); to test that a plugin is active. wp-browser includes many other convenience functions that save us a bunch of code. Thanks Luca!

The above code is from some of our simplest tests. We also set up some pretty complex tests that are run from classes that we instantiate with different params for different scenarios to cover all the various ways the functionality being tested can be used by the different plugins with different environment variables, etc. The test suite gets really rather complex, and now tests some areas of our plugins way more thoroughly than we ever did manually, and in far less time.

Here’s what the 25-minute run of the 11 scripts that make up the acceptance tests for WP Offload S3 Assets looks like compressed to 15 seconds.

The failure was absolutely intentional to give it a little color and you a sense of what an error looks like, and definitely not due to an upgrade to our site being run simultaneously as the docs URL was being tested. 😉

A full run of the test suite has 50 scripts and takes about 5 hours to run!

Smooth Sailing Huh?

Setting up the automated acceptance testing hasn’t been without its stumbling blocks.

The biggest issue for me when developing these acceptance tests for WP Offload S3, and one that Jeff (who’s now writing the tests for WP Migrate DB Pro) will attest to, is the incredibly slow feedback loop. Our shortest scripts take a couple of minutes to run, but some of the longer ones that have extensive interaction with Amazon S3 can take 30 minutes to complete all their scenarios. We’ll probably improve this by breaking up the tests further in the future, but when the reason for writing the tests is to have something that runs once at the end of a release cycle and occasionally in the background during that cycle, there’s no great impetus to break up the scripts too much. Besides, while developing the tests I had plenty of time to respond to some support requests, review some pull requests by the rest of the team, or generally cause trouble in the GitHub issues or on Slack.

Because I was tasked with writing the base tests while the other two WP Offload S3 team members cracked on with new releases, there were occasions when development would legitimately break the acceptance tests because the plugin had changed. This was pretty painful for me, but now that we’re at the point where the whole team is responsible for keeping the acceptance tests happy, each developer needs to update the tests when they make changes that could break them. Each developer should now add test scripts for new functionality too.

One problem I recently found with the current Docker setup is that I made it too secure and relied on Docker for macOS’s (and I suspect Windows’) ability to translate writes to volume mounts from within the container to the running user’s own file ownership and permissions. When I tried running our tests on Linux, it failed to set up the website or install the npm or Composer requirements because it could not write to the mount point as user “www-data”. I expect I’ll use the same method as the Docker-based Devilbox to overcome this and use a .env file to allow the specification of the user’s UID and GID. In fact, at some point it might be worth pulling in and extending Devilbox with our containers so that each developer can easily run the acceptance tests with a different combination of web server and PHP version specified in the .env file. It wouldn’t take much effort.
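As a rough idea of what that could look like, here is a minimal sketch of a helper that records the current user’s numeric UID and GID in an env file. The function name and the HOST_UID and HOST_GID variable names are hypothetical; nothing in our current docker-compose.yml reads them, and Devilbox’s own variable names may differ.

```shell
#!/bin/sh
# Hypothetical sketch: HOST_UID / HOST_GID are illustrative variable names,
# not something the current Docker setup reads.

write_env_file() {
    env_file="${1:-.env}"   # defaults to .env in the current directory
    # id -u and id -g print the caller's numeric user and group IDs.
    {
        echo "HOST_UID=$(id -u)"
        echo "HOST_GID=$(id -g)"
    } > "$env_file"
}

# Demo: write to a temporary file, show it, then clean up.
demo_file="$(mktemp)"
write_env_file "$demo_file"
cat "$demo_file"
rm -f "$demo_file"
```

Docker Compose reads a .env file in the project directory for variable substitution, so values like these could then be referenced from docker-compose.yml to run the containers’ processes as the host user rather than “www-data”.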

Is It Helping?

Oh yes, automating acceptance testing is most definitely helping reduce the pain of testing a release.

At the moment 75% of the tests we used to run manually for Amazon Web Services and WP Offload S3 Lite are covered by automated acceptance tests. 🎉

That’s a huge win for us. Eliminating the need to manually step through all those tests not only saves time when testing WP Offload S3 Lite, but as most of the tests have to be re-run for WP Offload S3 and WP Offload S3 Assets, we save time for them too. We’re saving at least a couple of days’ worth of testing per developer per release (though probably more). With two to three developers on each release, that’s four to six days of brain-numbing testing saved every time!

Automated acceptance tests are also more thorough than the manual tests we had. Our acceptance tests have caught a good number of existing bugs, but, possibly even more helpful, new bugs have already been squashed on recent releases that we’d have struggled to pick up manually.

Both our WP Offload S3 and WP Migrate DB Pro teams are much happier now, knowing that the most tedious tests are now handled by the automated tests.

Please Sir I Want Some More!

Did this behind-the-scenes look at how we’ve improved our release testing by automating a chunk of it pique your interest in automated acceptance testing? If so, check out this article on Automated Acceptance Testing for WooCommerce. Would you like to see more on this, maybe some tutorials? Let me know in the comments.

How are you running tests on your projects? Please share your tips and tricks in the comments too, we’d love to hear how it’s worked out for you.

Source: https://deliciousbrains.com/how-were-automating-acceptance-testing/