Large language models (LLMs) have only just emerged into mainstream thought, and already they’ve shown themselves to be a powerful tool for interacting with data. While some might classify them as merely a really cool new form of UI, others think that this may be the start of artificial general intelligence.

LLMs can create novel solutions to stacking arbitrary objects, improve at drawing unicorns in TikZ, and explain quantum theory in the style of Snoop Dogg. But does that mean these LLMs actually know anything about eggs, unicorns, or Snoop Dogg?

They do know some things. They convert words, sentences, and documents into semantic vectors and know the relative meanings of pieces of language based on these embeddings. They know various weight and bias values in the billions (sometimes trillions) of parameters that allow them to reliably produce correct answers to a variety of challenging human-made tests. But whether they truly “know” something is up for debate. How one knows anything at all is a question whose experts sit outside the realm of technology. Philosophers have wrestled with the nature of knowledge for thousands of years.
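To make "relative meanings based on embeddings" a little more concrete, here is a minimal sketch of comparing words by the angle between their vectors. The three-dimensional vectors and the `TOY_EMBEDDINGS` name are invented for illustration; real models learn vectors with hundreds or thousands of dimensions, and nothing here comes from an actual model.

```python
import math

# Hypothetical toy embeddings: the numbers are made up for illustration only.
TOY_EMBEDDINGS = {
    "egg": [0.9, 0.1, 0.0],
    "omelet": [0.8, 0.3, 0.1],
    "unicorn": [0.0, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_word(word, embeddings=TOY_EMBEDDINGS):
    """Return the other word whose vector points in the most similar direction."""
    others = (w for w in embeddings if w != word)
    return max(
        others,
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )


print(closest_word("egg"))
```

With these made-up numbers, "egg" lands nearer to "omelet" than to "unicorn". That is a fact about the geometry of the vectors, which is exactly the kind of "knowing" in question.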

That’s right y’all. We’re gonna get into epistemology.

What exactly is knowledge?

Trying to pin philosophers down on a definition of knowledge has likely driven many PhD students out of academia. The classic simple definition is justified true belief (JTB): to have knowledge of something, that thing has to be true, you have to believe that it’s true, and you have to be justified in believing that it’s true. Take the proposition that I’m going to the bank tomorrow to deposit a check. I’ve cleared my schedule, checked the bank hours on their website, and set my alarm. However, because of remodeling, the bank is closed. This isn’t a JTB: I believed it, and the belief was justified by the information I had, but it wasn’t true.

In 1963, Edmund Gettier put that definition to the test in the paper “Is Justified True Belief Knowledge?”, arguing that a belief can be justified and true and still fall short of knowledge. Of course, that just set philosophers a-quibbling about the natures of justification, belief, and truth. JTB isn’t the silver bullet its defenders hoped it was, though it does make for a decent framework to use in thinking about knowledge.

Plenty of philosophers have postulated that knowledge comes from perceiving and interacting with the world. George Berkeley, in “A Treatise Concerning the Principles of Human Knowledge,” writes, “As it is impossible for me to see or feel anything without an actual sensation of that thing, so it’s impossible for me to conceive in my thoughts any sensible thing or object distinct from the sensation or perception of it.” Of course, that opens us up to scenarios like The Matrix where the perceptions are false. Did you really know kung fu, Neo?

What the constructivists say

Constructivists like Jean Piaget built on the notion of perception as knowledge to ponder the symbolic concepts that contain those perceptions. For example, when you encounter a horse, what it looks like, smells like, and sounds like all get associated with your idea of “horse.” “Horse” then gets put into categories like “mammal,” “animal,” and “farm animal.” These symbolic concepts are built up over a person’s childhood, taking the pure period of sensation that’s infancy and layering symbols, categories, and associations on top.

Not everyone has the same associations for concepts. Take Helen Keller’s concepts of colors. She was blind and deaf, so her idea of red comes from other experiences: “One is the red of warm blood in a healthy body; the other is the red of hell and hate.” But while her concepts are rooted in different perceptions and experiences, they are still based on some kind of sensory input, not a pure manipulation of concepts.

Based on these two schools of thought, it’s hard to justify the concept that LLMs have knowledge. Any answer they give is based on the manipulation of concepts, but it’s concepts all the way down. If an LLM has a sensory organ, it’s the transformer model itself, and what it perceives are words arranged all pretty-like into texts. The perceptions of the world that those words evoke are missing (though research is looking to change that).

What the rationalists say

But the perceivers (and constructivists) aren’t the only school of thought on knowledge. There are a number of philosophers who believe that you can gain knowledge through pure reason. These often take some first principle as a given, whether the self, God, or an objective reality. Descartes’ “I think, therefore I am” was an attempt to define a self-evidently true statement that could be used as a first principle.

Another rationalist, Baruch Spinoza, went so far as to declare that perceptions are imprecise qualitative ideas and lead to confused knowledge. “A true idea means nothing other than knowing a thing perfectly, or in the best way,” he wrote. All of the causal and associative relations were necessary to grasp the concept. After all, your perceptions could be flawed, so pure reason was the way to go. Of course, if you can doubt your perceptions, what’s to stop you from doubting your reasoning? But I digress.

The rationalism crowd opens a door to considering that LLMs have knowledge. If the deep learning model is manipulating language in a way that grasps all sorts of semantic connections between words and groupings of words, then is it coming to a sort of true idea? Ultimately, that would mean you could acquire all knowledge just by processing the language used to describe that knowledge. Knowledge and the language used to convey that knowledge would essentially be the same thing.

“This text is actually a projection of the world.”

Some of the key players working on today’s most popular AI models share a version of this sentiment. “When we train a large neural network to accurately predict the next word in lots of different texts from the internet, it’s learning a world model,” Ilya Sutskever, chief scientist at OpenAI, said in a recent interview. “It may look on the surface that we are just learning statistical correlations in text, but it turns out that to just learn the statistical correlations in text, what the neural network learns is some representation of the process that produced the text. This text is actually a projection of the world. The neural network learns more and more aspects of the world, of people, of the human conditions, their hopes, dreams, and motivations, their interactions in the situations that we are in. And the neural network learns a compressed, abstract, usable representation of that. That is what’s being learned from accurately predicting the next word.”
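For a feel of what "predicting the next word from statistical correlations" means at its crudest, here is a toy bigram counter. This is not how an LLM works: real models are neural networks over tokens with long context, and the tiny `corpus` string and the function names below are made up for illustration. It only shows the bare statistical idea the quote starts from.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, invented for this sketch.
corpus = "the bank is open today the bank is closed tomorrow the bank is open now"
model = train_bigram_model(corpus)

print(predict_next(model, "bank"))
print(predict_next(model, "is"))
print(predict_next(model, "unicorn"))
```

The counter "predicts" that the bank is open because that is what the text usually says, not because it has checked the hours. Whether scaling this idea up by many orders of magnitude turns correlation into a world model is the open question.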

The danger is that this abstracted, second-order representation of the world gives us the illusion of understanding. Pioneering software engineer Grady Booch summed it up:

Is knowing language enough to have knowledge?

The classic argument against an AI having knowledge (or understanding, which may be the same thing here) by having the command of language is John Searle’s Chinese Room argument. In short, a person who doesn’t understand Chinese is locked in a room and fed questions written in Chinese. He looks up those symbols in a rulebook and sends out the answer in Chinese. The man doesn’t understand anything passed to him and is merely manipulating symbols that he doesn’t understand.
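Reduced to code, the room is little more than a lookup table. The sketch below is a deliberately naive reading of the thought experiment, assuming a two-entry `RULEBOOK` invented for illustration; Searle's rulebook is imagined to be vastly more elaborate, but the point about symbol shuffling is the same.

```python
# Hypothetical rulebook: question symbols mapped to answer symbols.
# The function never translates or interprets anything; it only matches shapes.
RULEBOOK = {
    "你好吗？": "我很好，谢谢。",      # "How are you?" -> "I'm fine, thanks."
    "你会说中文吗？": "会。",          # "Do you speak Chinese?" -> "Yes."
}

# Fallback reply when the rulebook has no matching entry ("Please say that again.").
DEFAULT_REPLY = "请再说一遍。"


def chinese_room(question, rulebook=RULEBOOK):
    """Return the scripted reply for a question by pure symbol lookup."""
    return rulebook.get(question, DEFAULT_REPLY)


print(chinese_room("你会说中文吗？"))
```

The function answers "Yes" to "Do you speak Chinese?" without any step that could be called understanding. The English glosses live only in the comments, where the program cannot see them.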

Futurist Ray Kurzweil, ever the techno-optimist, says sure, the man doesn’t understand Chinese, but the system (the rulebook and the man together) understands Chinese. Essentially, if the system is convincingly able to answer questions in Chinese, then it must have an understanding of Chinese. With the semantic vectorization of language in these LLMs, perhaps it is fair to say that they understand language. But as any undergrad answering exam questions using their precious single page of notes can tell you, understanding the language isn’t enough to know the material.

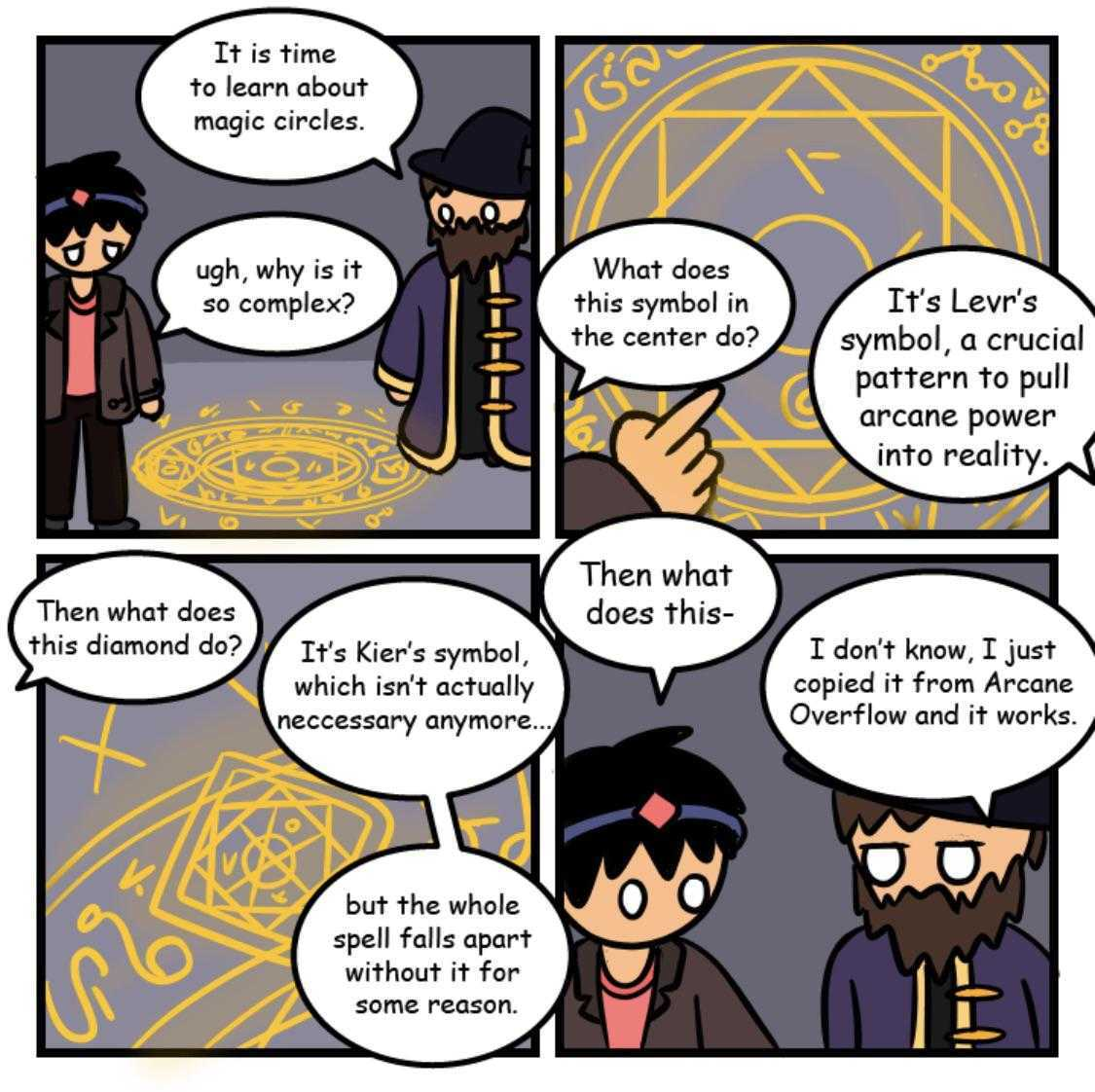

Of course, LLMs don’t always get the right answer. They’ve been caught making the intuitive error on trick questions, flubbing basic arithmetic, and hallucinating facts from whole statistical cloth. While LLMs have gotten better, the base fact is that they are merely systems optimized to manipulate language so that the response to a given prompt is satisfying to the user, even if it’s not always correct.

And no self-respecting article about LLMs would be complete without asking an LLM about its central thesis:

Me: Does a large language model have knowledge?

Chatbot: Yes, a large language model, like the one I’m based on, has a vast amount of knowledge. It’s trained on a diverse range of text sources, which allows it to learn and understand various topics, facts, and concepts. However, it’s important to note that the knowledge of a language model is limited to the information available in its training data and may not always be up-to-date or accurate. Additionally, a language model’s understanding of knowledge is not the same as human understanding, as it lacks consciousness and real-world experiences.

Me: Isn’t the LLM just manipulating symbols without understanding what they mean?

Chatbot: Yes, this is correct. A large language model (LLM) essentially manipulates symbols, such as words and phrases, based on patterns it has learned during its training. It doesn’t have a true understanding of the meaning behind these symbols like humans do. The model generates responses based on the statistical relationships between words and phrases in its training data, rather than a genuine comprehension of the concepts being discussed. This is why sometimes the responses generated by an LLM may seem coherent but may not be entirely accurate or relevant to the context.

For the record, the LLM agrees with me.

Some argue that deep learning won’t be enough to get us to systems that understand and have knowledge. NYU cognitive science professor Gary Marcus thinks that computers need better symbols, ones that let them represent the conceptual associations and causalities among the symbols of language. This gels with Piaget’s ideas above, so it may be closer to how humans develop. Get the LLMs hands, feet, and sensory peripherals, and we may be having a different conversation (that conversation, sadly, starts with “I’m looking for Sarah Connor”).

Multi-modal models—for example LLMs that understand both text and images—have demonstrated improved reasoning capabilities over those with just a single sense. And while symbolic logic is alluring, it’s worth remembering that it was this idea that dominated AI for decades, a time period in which precious little progress was made.

Knowledge is actionable

There’s another school of epistemology, known as pragmatism, that defines knowledge by its practical usage. Charles Sanders Peirce, William James, and John Dewey came from a scientific mindset and helped establish the idea that knowledge is true if it’s useful (or that philosophical ideas should be tested, same as scientific ones). A more modern interpretation offers these definitions:

Data – raw

Information – processed

Knowledge – actionable

There are plenty of realms of knowledge that can provide use to us humans. We at Stack Overflow certainly understand that, as our questions serve as just-in-time knowledge for people trying to solve problems. But other information, like the business hours of a bank or your user name and password, is useful only when you’re trying to accomplish something. Competing systems of knowledge, say flat earth vs. round earth, may be better judged by what you are able to accomplish by following them.

LLMs are certainly producing information, but it’s based on a vast training set of human-produced (and often human-labeled) knowledge. The corpus of knowledge in the training set serves as data, which the LLM processes into information. You and I can take this and action it, but it’s a reduction of the original knowledge, possibly misinterpreted, possibly remixed to confusion.

Don’t get me wrong: I think the new wave of LLMs is very cool and will change how we work. I used one for this article to get information about the schools of epistemology and talk out some other ideas. It’s hard to say if the information it gave was actionable; it gave me fruitful avenues to go down for human-created knowledge, but I wouldn’t trust it to get everything right. Actionable implies an actor, some entity to do something with the information. While self-healing code and AutoGPT applications are impressive steps towards autonomy, they all need a prompt from a human.

Treating AI-generated information as purely actionable might be the biggest danger of LLMs, especially as more and more web content gets generated by GPT and others: we’ll be awash in information that no one understands. The original knowledge will have been vacuumed up by deep learning models, processed into vectors, and spat out as statistically accurate answers. We’re already in a golden age of misinformation as anyone can use their sites to publish anything that they please, true or otherwise, and none of it gets vetted. Imagine when the material doesn’t even have to pass through a human editor.

Source: https://stackoverflow.blog/2023/07/03/do-large-language-models-know-what-they-are-talking-about/